While artificial intelligence is still something that many remain wary of, others are ready to embrace it. Since AI has reached new heights in the last few years, game developers have been keen to explore these new possibilities to see how they can streamline work processes and save valuable time that could be invested elsewhere.

But how do these tools actually work? Are they reliable? And the big question: how should AI be used? Artists have been concerned that generative art could damage their work, and many argue that it should not be used to replace talented artists. In this guest post, Belka Games lead artist Anton Danko explains why artists are just as important as ever and how AI can be used as just another tool.

Recent advances in art-generating AI have led to countless debates about whether artists will be put out of work and which other kinds of jobs could get taken over by AI. Fortunately, this alarmist speculation is finally starting to die down a bit. We've come to see that we need artists more than ever, and AI is just another tool in the artist's toolbox, like Photoshop. At Belka Games, we've been experimenting with generative AI for a while now, and we've decided to share some of what we've learned in this article.

The AI Boom

Generative AI had been on the verge of becoming a thing for several years, but in late 2022 it suddenly made a big splash and entered a whole new phase of development. Even so, AI still isn't a magic bullet, and it can take a lot of effort to wrangle it into a usable state.

When we decided to see what AI could do for us, we started by testing many different services. We sat through a dozen presentations about AI art projects like Phygital+ and Scenario.gg. But after the third one, we realised that all these services work in more or less the same way, and they all have a number of technical limitations that are a big deal for us.

So we spent a couple of months on a painstaking, multifaceted analysis of AI's potential, its legal ramifications, training methods, and compliance with our company's art standards.

Pro tip

When choosing a service, make sure it can give you consistent results when generating content. Have the developers made it possible to fine-tune AI models? Have they implemented an Inpaint mode? Services that don't give you any control over the stylistic essence of the art they create aren't a good fit for game content pipelines: your artists will have to constantly review the art and touch it up manually, which can end up taking just as long as drawing it from scratch. You can still use basic services like these to help generate ideas, concepts and marketing banners.

We picked a few different services and tested them all to hell and back. And the winner is... Stable Diffusion! We liked it for several reasons:

1. You can use it to generate content based on your own data sets.

2. It has a lot of control points and can be precisely configured.

3. It allows secondary tools to be developed quickly.

4. It has a wide range of functions.

5. It can be installed locally.

6. You can set up cloud access for remote content generation.

AI Isn't a Magic Wand

You can't just take a generative AI program and plug it into your pipeline. Any change to your existing processes carries potential risks for your product.

You need to take things one step at a time:

1. Take a peek under the hood and see how everything works.

2. Learn about what AI can do and how it's trained.

3. Develop a specific plan for integrating AI into your projects' existing processes.

4. Implement it in your projects and provide support to ensure you don't mess up your feature-creation roadmap.

To do this, we put together a small team of people who were really passionate about the topic and started testing out prompts, models, and embeddings. We also tried linking different AI tools together and integrating them into the tools we were already using.

Choosing a Testing Ground

We tested our pipelines on Solitaire Cruise (SC), since I was the lead artist on that project until recently. I know SC very well, so it was the easiest place for me to run a few experiments and see what happened when we integrated AI into our workflow.

Solitaire Cruise has a small art team with sky-high ambitions. We pulled our entire wishlist out of our backlog, picked the ideas our product team thought were the most promising, set our priorities, and started researching the final task list.

Pro tip

Based on our experience, you can delegate the following tasks to AI: making art for LiveOps, location backgrounds, avatars, icons, characters, and art for offers. This can make content generation much more efficient for a casual game, and you can use the work hours you save to make new content.

Running Tests

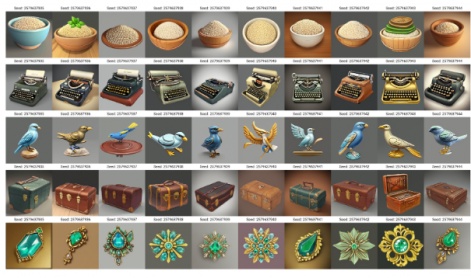

Our first experiment was the most valuable one, but also the most expensive. We trained over 100 models, including hypernetworks, embeddings, and LoRA with all its subtypes. We tried many different training combinations until we finally found the right set of parameters. The result was a secret sauce made up of a specific number of images per data set, their contents, how similar they are to each other, the number of training steps, the influence of the weights, a set of the most effective prompts, and so on. It was a pain, but it was totally worth it. Now it only takes us a few hours to set up a pipeline for a new art stream, and most of that time is spent preparing the data set.

While we were figuring out the best way to train the AI, we realised that most of the guides online just weren't specific enough. They don't give you a full understanding of the tool, and sometimes they can even mislead you. For example, model-training guides might talk about what epochs are and how many of them you need to get a flexible model, or they might say something like "set 'gradient accumulation steps' to 2," but they never explain why you should do this or what it even does. There just isn't a lot of valuable information in them, and you can count the useful resources in the game development field on one hand. So we only had one option: do the research ourselves.
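Since the guides rarely explain it, here is a minimal sketch of what a setting like "gradient accumulation steps = 2" actually does. It uses a toy one-parameter linear model in plain Python rather than Stable Diffusion itself; the data, learning rate, and function names are illustrative assumptions, not part of any training tool. Instead of updating the weights after every mini-batch, the trainer adds up the gradients of several smaller micro-batches and updates once, so the result matches a single step on the larger combined batch.

```python
import math

# Toy data roughly following y = 3x; purely illustrative, not real training data.
DATA = [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2), (4.0, 11.8)]


def grad_mse(w, batch):
    """Gradient dL/dw of the mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def step_full_batch(w, batch, lr):
    """One optimizer update computed from the whole batch at once."""
    return w - lr * grad_mse(w, batch)


def step_accumulated(w, batch, lr, accum_steps):
    """One optimizer update built from `accum_steps` equal-sized micro-batches.

    Each micro-batch gradient is scaled by 1/accum_steps and summed; the weight
    is updated only once, after the last micro-batch.
    """
    if len(batch) % accum_steps != 0:
        raise ValueError("batch must split evenly into micro-batches")
    size = len(batch) // accum_steps
    accumulated = 0.0
    for i in range(accum_steps):
        micro = batch[i * size:(i + 1) * size]
        accumulated += grad_mse(w, micro) / accum_steps  # accumulate, no update yet
    return w - lr * accumulated


def updates_per_epoch(num_images, batch_size, accum_steps):
    """Optimizer updates per epoch, assuming a final partial group still triggers one."""
    return math.ceil(num_images / (batch_size * accum_steps))


if __name__ == "__main__":
    full = step_full_batch(0.0, DATA, lr=0.01)
    accum = step_accumulated(0.0, DATA, lr=0.01, accum_steps=2)
    print(f"full batch: {full:.6f}, accumulated: {accum:.6f}")  # same update
    # 40 images at batch size 1: accumulating 2 steps halves the update count
    print(updates_per_epoch(40, batch_size=1, accum_steps=1))
    print(updates_per_epoch(40, batch_size=1, accum_steps=2))
```

In other words, accumulation of 2 at batch size 1 trains roughly as if the batch size were 2 while only holding one image's activations in memory at a time, and it halves the number of weight updates per epoch. That is one reason an epoch count copied from a guide with different batch and accumulation settings won't necessarily give you the same result.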

During our research, we realised that we had to take a flexible approach to get tangible results. Combining that flexibility with our employees' other responsibilities was pretty much impossible, so we created our own AI department. This department analyses new tools, looks for ways to optimise our projects, trains resources, integrates new AI pipelines into projects, and provides support.

Using AI

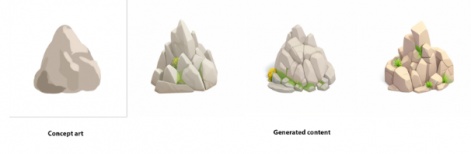

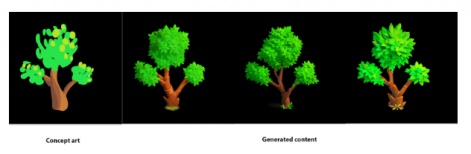

Stable Diffusion offers two ways of interacting with the AI: txt2img (text prompt only) and img2img (image plus text prompt). Getting the exact asset you want from a text prompt alone is very hard because the results can be highly unpredictable, so it's better to start from concept art. The more detailed the concept is, the more predictable the results will be. Here's an example of a decorative item we made for Bermuda Adventures using Stable Diffusion, and how we trained the model for it.

Once it's generated, we upscale the image and tweak it until we get the result we're looking for. Including the modelling stage, this task would have taken about twice as long using a traditional art pipeline.

Pro tip

If an object is going to be animated or has lots of fine details, you're better off splitting it up into parts and generating each part separately.

The AI can figure out the location of objects like plants and rocks pretty much right away. You don't even need concept art for this stuff - a basic sketch and a text description will do the trick.

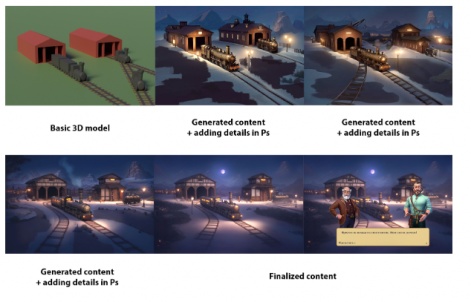

How long do you think it would take to model all the objects for a single event? You can also feed in a basic 3D model instead of a sketch.

Here's an example of a background we generated for an event in Clockmaker.

Pro tip

Using a basic 3D model with a low denoising strength (up to 0.4) and/or ControlNet, you can pin down an exact angle, shape, interior composition, and so on.
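As a rough illustration of what a denoising strength of 0.4 means in practice, the sketch below mirrors how, to our understanding, the Hugging Face diffusers img2img pipeline turns `strength` into the share of the sampling schedule it actually runs; other front ends may round slightly differently. The helper name `img2img_schedule` is hypothetical. With 50 sampling steps and a strength of 0.4, the 30 noisiest steps are skipped and only the last 20 run, starting from a partially noised copy of the input, so the 3D model's angle and silhouette largely survive.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Return (steps_skipped, steps_run) for an img2img pass.

    Hypothetical helper modelled on the diffusers approach: run
    int(num_inference_steps * strength) steps and skip the noisiest ones.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    steps_skipped = num_inference_steps - steps_run
    return steps_skipped, steps_run


if __name__ == "__main__":
    for s in (0.2, 0.4, 0.75, 1.0):
        skipped, run = img2img_schedule(50, s)
        print(f"strength={s}: skip {skipped}, run {run} of 50 steps")
```

At a strength of 1.0 no steps are skipped, so the input is fully replaced by noise and the result behaves much like txt2img; keeping the value low is what lets the base model dictate the composition.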

The Bottom Line

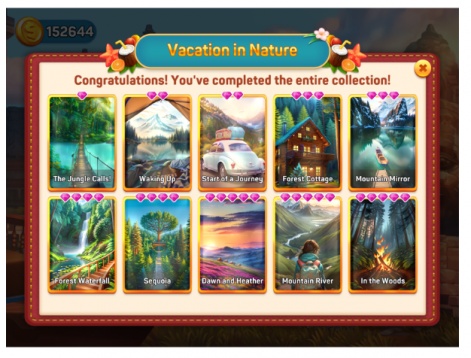

The benefits of integrating AI into your art workflow can be demonstrated by looking at the process of developing collectable cards for Solitaire Cruise. Right now it takes about three work-hours to create a collection of ten cards using AI.

If we do it the old-fashioned way (concept + 2D render), it takes about 240 work-hours to make a collection of ten cards. And the difference doesn't stop there: while testing out new pipelines, we realised that we could save even more time if we polished our team's processes a little.
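To put the two figures side by side, here is the arithmetic for one ten-card collection, using only the approximate numbers quoted above (about 3 work-hours with AI versus about 240 the traditional way).

```python
CARDS_PER_COLLECTION = 10
HOURS_WITH_AI = 3.0          # approximate figure quoted in the article
HOURS_TRADITIONAL = 240.0    # concept + 2D render, approximate figure from the article

per_card_ai = HOURS_WITH_AI / CARDS_PER_COLLECTION
per_card_traditional = HOURS_TRADITIONAL / CARDS_PER_COLLECTION
speedup = HOURS_TRADITIONAL / HOURS_WITH_AI
saved = HOURS_TRADITIONAL - HOURS_WITH_AI

print(f"Per card: {per_card_ai:.1f} h with AI vs {per_card_traditional:.1f} h traditionally")
print(f"Speed-up: {speedup:.0f}x, saving {saved:.0f} work-hours per collection")
```

That works out to roughly 0.3 versus 24 work-hours per card: about an 80-fold speed-up, or around 237 work-hours saved per collection.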

Integrating AI

The art department isn't the only team that can use AI in its workflow; adjacent departments can too. Think about narrative or game design teams: have they considered everything AI can do to help them produce content more efficiently?

We use AI in all of our art pipelines now. Our model and pipeline base is growing by the day, and our workflow is getting better and simpler all the time. We're constantly learning about new tools that are further streamlining our approach to art creation.

Since we only make casual games, we're also working on unifying our models and pipelines alongside implementing AI. This is raising the quality of our output and helping us integrate AI into our company's workflow more efficiently.

Edited By Paige Cook