Guest post from Bob Gazda, senior director of engineering at InterDigital

Video gaming is a demanding technology ecosystem. Major leaps in gaming performance and experiences have been made in large part because of broader advances in computing horsepower and video processing technology.

In consoles, for example, we've come a long way from the 8-bit microprocessor found in the Atari 2600 in the late 1970s, which rendered best in black-and-white on a CRT television. Almost quaint by today's standards, that early Atari console represented the state of the art at the time, and helped pave the way for video gaming to become a valuable slice of the home entertainment market – even though it sold just 400,000 consoles when it launched in 1977. Our world has since changed.

Today, there are more than 2 billion video gamers in the world, and even average consoles boast advanced octa-core processors and ultra-high-definition graphics output up to 8K resolution. Sony, for example, reported sales of 1.5 million PlayStation consoles in just the first quarter of 2020. Gaming is big business and that trend is projected to accelerate. A recent paper by ABI Research, commissioned by InterDigital, highlights this trend, with a specific focus on the emerging world of cloud gaming.

The computing horsepower onboard the next-gen gaming consoles, alongside their constantly evolving PC counterparts, will be capable of handling complex functions like ray tracing, which will enable more realistic experiences in VR and AR games. That said, the console and PC gaming paradigm is still rooted in a traditional architecture model.

Cloud gaming will change all of that. As more complex games become cloud-based, the gaming industry will help showcase some of the newest edge computing infrastructure models, which will be required for immersive and interactive games. These new architectures will be able to achieve levels of realism in games that haven't been possible before, even on the most advanced consoles. In essence, edge computing takes all the best elements of cloud computing and brings them closer to the user, so that game experiences on a phone or on the go with light-weight XR glasses can be as rich as they are on a console - but ultimately more powerful.

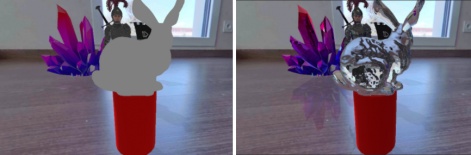

A critique of AR games is that the graphics can look cartoonish at times, primarily due to the complexity in graphics and video rendering. For example, raytracing is a technique of matching the lighting conditions in an environment, with the goal of making virtual objects look real. Raytracing is important in AR and XR games, where the game must match the lighting conditions of virtual objects being rendered in the game with the lighting conditions in the real, physical environment of the player.

Intensive processing

While it sounds simple, raytracing is a computationally complex and processing-intensive operation that requires a lot of computing power and very capable GPUs. While raytracing can be realised on some next-gen gaming consoles and gaming-level PCs, its processing complexity and required hardware support are still too advanced to be realised on a mobile device or today’s wireless AR headsets.

Raytracing is one area where cloud gaming models, enhanced with edge computing, will come into play. When gaming is offered as a cloud service, the game distributor can offload the advanced and computationally intensive ray tracing processing from the end-user device to the network edge, which will be capable of housing much more advanced processing power than a mobile phone or an AR headset. The edge is uniquely positioned to provide the required processing power within the low-latency constraints of XR and interactive gaming, which cannot be satisfied by the cloud alone.

The computational intensity of raytracing is one challenge; the associated rendering brings others. Once the raytracing compute is done at the edge node, the game could then do the final object rendering on the user device, depending on the game, the user’s location and context, and the demands of the various networking and compute components in the system. This is a sort of "hybrid" rendering model. Alternatively, both the ray tracing and the rendering could be done at the edge, and a fully rendered game would then be delivered to the device.

The reasons for these variations in approach largely depend upon how sensitive the particular game is to certain factors, what device it would be delivered to, and the current network latency and bandwidth conditions. Some games, for instance, are going to be more sensitive to latency or jitter than others, meaning that more latency-sensitive cloud games will need to be closer to the edge of the network. At the edge, it will require fewer network hops, and therefore less latency and jitter, to deliver the game to the user.
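To make that trade-off concrete, here is a minimal sketch of how a cloud gaming service might pick between the hybrid model and full edge rendering. Everything in it is an assumption for illustration: the `SessionContext` fields, the `choose_render_mode` function, the bandwidth threshold and the policy itself are hypothetical, not taken from any real platform or from the ABI Research paper.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RenderMode(Enum):
    HYBRID = "hybrid"        # ray tracing at the edge, final rendering on the device
    FULL_EDGE = "full_edge"  # ray tracing and rendering at the edge, video streamed


@dataclass
class SessionContext:
    """Hypothetical snapshot of one player's device and network conditions."""
    device_can_render: bool   # can the device do the final object rendering?
    edge_rtt_ms: float        # measured round trip to the nearest edge node
    downlink_mbps: float      # currently available downlink bandwidth
    latency_budget_ms: float  # how much network latency this game tolerates


# Assumed bandwidth needed to stream fully rendered frames (illustrative value).
FULL_STREAM_MBPS = 25.0


def choose_render_mode(ctx: SessionContext) -> Optional[RenderMode]:
    """Toy policy: return a render mode, or None if the edge cannot serve the session."""
    if ctx.edge_rtt_ms > ctx.latency_budget_ms:
        return None  # the edge node is too far away for this game's sensitivity
    if ctx.downlink_mbps >= FULL_STREAM_MBPS:
        return RenderMode.FULL_EDGE  # the link can carry a fully rendered stream
    if ctx.device_can_render:
        return RenderMode.HYBRID  # constrained link: finish rendering on the device
    return None


if __name__ == "__main__":
    headset = SessionContext(device_can_render=True, edge_rtt_ms=8.0,
                             downlink_mbps=12.0, latency_budget_ms=20.0)
    print(choose_render_mode(headset))  # RenderMode.HYBRID
```

A real service would weigh many more signals, such as jitter, device battery and edge node load, but the shape of the decision would likely be similar: the game's sensitivity sets the budget, and the device and network conditions determine which model fits inside it.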

Latest in latency

When talking about latency in a gaming context, it is important to note that generally, the gaming experience is better for the user if the latency is consistent throughout the experience. As outlined earlier, some games are more sensitive to latency than others. When a cloud-native or edge-native game can be designed to account for the latency that will be expected in its delivery, the user will enjoy a more consistent pace of the gameplay and the experience should feel more natural to them.

Latency is experienced by the user as a single phenomenon, but it is actually made up of several different parts. There is network latency, video rendering latency, latency in gameplay logic calculations and physics, and even latency in video capture – for example in an AR or XR game context. The sum of all these results in the end-to-end latency that the user experiences. New network architecture models, such as edge computing, combined with game design patterns, will help address all of these various types of latency and optimise the user experience for different types of games, depending on the needs of each one.
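The additive nature of latency can be sketched as a simple budget. The `LatencyBreakdown` class below and all of its numbers are hypothetical, chosen only to show how the components add up and how moving compute to the edge changes one term of the sum; they are not measurements.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    """Per-frame latency components in milliseconds (illustrative values only)."""
    network_ms: float
    rendering_ms: float
    game_logic_ms: float     # gameplay logic and physics calculations
    capture_ms: float = 0.0  # video capture, relevant for AR or XR games

    def end_to_end_ms(self) -> float:
        # The user perceives the sum of all components as a single delay.
        return (self.network_ms + self.rendering_ms
                + self.game_logic_ms + self.capture_ms)

    def within_budget(self, budget_ms: float) -> bool:
        return self.end_to_end_ms() <= budget_ms


if __name__ == "__main__":
    # Same game, same device; only the network term differs (assumed figures).
    via_cloud = LatencyBreakdown(network_ms=45.0, rendering_ms=8.0,
                                 game_logic_ms=4.0, capture_ms=6.0)
    via_edge = LatencyBreakdown(network_ms=10.0, rendering_ms=8.0,
                                game_logic_ms=4.0, capture_ms=6.0)

    budget_ms = 50.0  # hypothetical tolerance for a latency-sensitive XR game
    print(via_cloud.end_to_end_ms(), via_cloud.within_budget(budget_ms))  # 63.0 False
    print(via_edge.end_to_end_ms(), via_edge.within_budget(budget_ms))    # 28.0 True
```

Viewed this way, a game designer can reason about which term to attack for a given title: a fast-paced game may need the network term cut by moving to the edge, while a slower one may have room to spend more of its budget on rendering.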

In essence, edge-native games will emerge. Edge-native applications, including games, are applications or use-cases that could not exist without edge architectures. By comparison, consider traditional cloud gaming, where we simply take a console game and run it from the cloud. The game itself is essentially the same; it's just delivered without the need for a console.

Edge-native games, on the other hand, cannot exist without edge computing architecture, and they go beyond just enhancements to graphics and video. Edge computing will enable different types of gameplay - with higher quality and value for the gamer - that simply don't exist today. It's not simply about putting Call of Duty onto the edge, or enhancing Fortnite with even more realistic graphics. Edge computing will open up entirely new options, bringing AR and mixed reality into game development, and change the way games are both designed and experienced in the future.